Collecting and Enriching Data by Leveraging Snowplow for Deep Analytics

Mar 29, 2018

Every second of every day, countless new data points are created online. Making sense of all that data and finding a single piece of information that is useful, let alone actionable, is a daunting task. In the fast-moving world of news and media, being able to pick out pertinent information from the noise is not just important but a necessity.

A large company in the global news space discovered this firsthand as it attempted to stand up a beta news website. With the website taking in thousands of raw data points each second, it was clear that the company’s custom-built application was not up to the task of sifting through the raw data, cleansing it, or enriching it in a way that would be useful to the consumers of the information.

The company approached ClearScale, an AWS Premier Consulting Partner, asking it to evaluate whether Snowplow Analytics was a suitable solution and, if so, how it could be implemented in a way that would complement the beta news website. ClearScale immediately set about analyzing the client’s current implementation, its requirements, and Snowplow itself to determine how to deliver the desired outcome.

The ClearScale Solution: Using Snowplow Analytics to Collect and Enrich Data

Snowplow is a scalable and flexible application that allows for separate data collectors and data enrichers. Collecting data is a relatively simple process to set up, but when it comes to cleansing and enriching the data it becomes more challenging. Depending on the data being enriched or how in-demand the enrichment requests are, traditional cleansing approaches are resource-intensive, requiring extensive periods of time to perform the operations before the next request can start.

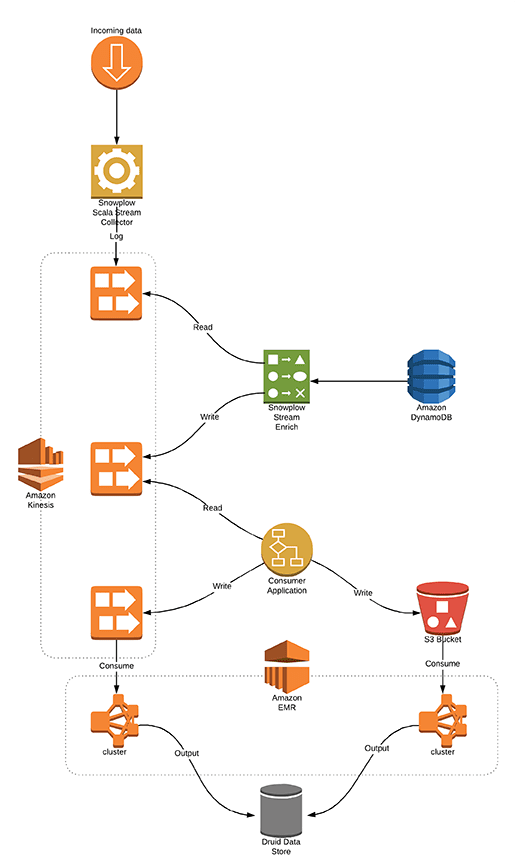

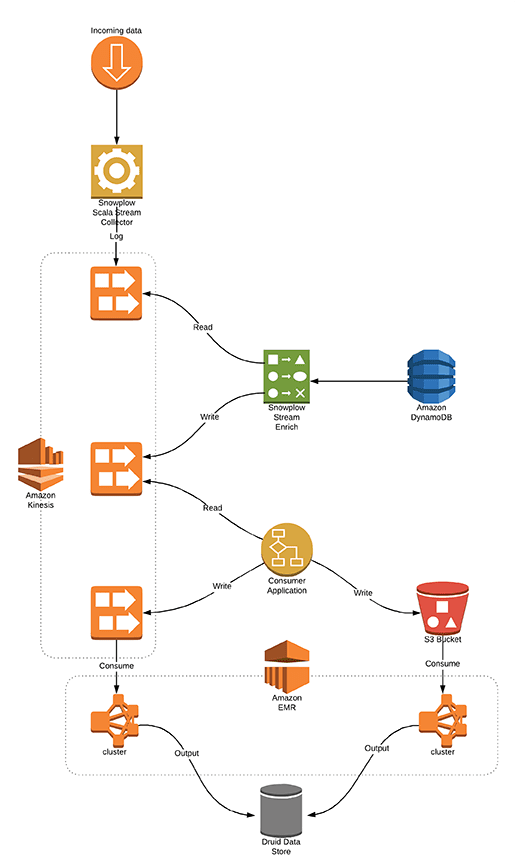

With Snowplow, a customer can leverage its native AWS implementation to realize economies of scale. By using AWS Kinesis, Snowplow can take in a steady stream of fresh data and analyze it as it arrives. The data is streamed from the beta news website. The collector analyzes it to determine which data is good and can move on to the enrichment process, and which data is bad and is weeded out. Good data is then streamed through Kinesis into the enrichment process, and the data sets that have been successfully enriched are committed to S3 for the batch data flow and to Kinesis for the real-time data flow. Two separate EMR clusters were created for these workloads, both leveraging Apache Spark and one of them also using the Spark Streaming extension. Outputs are then stored in a Druid data store for use in data analysis tools, in reporting, or for access via the web.

Collector and Enrich Logical Diagram
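The flow described above (collect, split good from bad, enrich, then fan out to a batch sink and a real-time sink) can be sketched in a few lines of Python. This is a simplified, in-memory illustration and not Snowplow’s or ClearScale’s actual code: the lists stand in for the Kinesis streams and the S3 bucket, and the field names (`event_id`, `page_url`, `timestamp`), the `page_host` enrichment, and the `Pipeline` class are all hypothetical.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Hypothetical minimum schema an event must satisfy to count as "good".
REQUIRED_FIELDS = ("event_id", "page_url", "timestamp")


def enrich(event: dict) -> dict:
    """Return a copy of the event with one illustrative derived field."""
    enriched = dict(event)
    enriched["page_host"] = urlparse(event["page_url"]).netloc
    return enriched


@dataclass
class Pipeline:
    # Each list is an in-memory stand-in for a real stream or store.
    good: list = field(default_factory=list)           # "good" stream
    bad: list = field(default_factory=list)            # "bad" stream
    batch_sink: list = field(default_factory=list)     # e.g. S3 (batch flow)
    realtime_sink: list = field(default_factory=list)  # e.g. Kinesis (real-time flow)

    def collect(self, raw: dict) -> None:
        """Route a raw event to the good or bad stream."""
        missing = [name for name in REQUIRED_FIELDS if name not in raw]
        if missing:
            self.bad.append({"event": raw, "missing": missing})
        else:
            self.good.append(raw)

    def run_enrichment(self) -> None:
        """Drain the good stream, enrich each event, and write to both sinks."""
        while self.good:
            enriched = enrich(self.good.pop(0))
            self.batch_sink.append(enriched)
            self.realtime_sink.append(enriched)


pipeline = Pipeline()
pipeline.collect({"event_id": "1", "page_url": "https://news.example.com/a", "timestamp": 1})
pipeline.collect({"event_id": "2"})  # missing fields, so it lands in the bad stream
pipeline.run_enrichment()
```

Keeping `collect` and `run_enrichment` as separate steps connected only by the good stream mirrors the decoupling in the architecture: either side can be run, or scaled, without touching the other.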

It is important to note that in order to accomplish this successful implementation of Snowplow, ClearScale needed to be certain that the data collectors and the data enrichers were able to scale independently of one another. Not only would this allow for the varying rates and volumes of data that had to be collected and enriched as needed, but it meant that the client could potentially have fewer collectors with more enrichers active at any one point in time, thus allowing for a quicker turnaround time for cleansed data for analysis. ClearScale went about designing and implementing two separate and independently managed auto-scaling groups to be certain that the client’s cleansed data would be near real-time.

By approaching it in this manner, ClearScale also ensured that any new data types or data sets that needed to be analyzed in the future would take minimal effort for the client to set up. It also gave the client more flexibility to create complex enrichment rules without much effort or custom development, and without the worry of degrading the overall performance of the solution and architecture in the future.
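To illustrate why scaling the collectors and enrichers independently matters, here is a minimal sketch of a capacity calculation for two separate groups. It assumes, purely for illustration, that an enricher instance handles far fewer events per second than a collector instance; the `ScalingGroup` class, the throughput figures, and the size limits are hypothetical and are not taken from the client’s deployment or from the AWS Auto Scaling API.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingGroup:
    name: str
    events_per_instance: float  # assumed events/sec one instance can handle
    min_size: int
    max_size: int

    def desired_capacity(self, events_per_second: float) -> int:
        """Instances needed for the given load, clamped to the group's limits."""
        needed = math.ceil(events_per_second / self.events_per_instance)
        return max(self.min_size, min(self.max_size, needed))


# Two independently managed groups with different (made-up) characteristics.
collectors = ScalingGroup("collectors", events_per_instance=5000, min_size=2, max_size=20)
enrichers = ScalingGroup("enrichers", events_per_instance=1000, min_size=2, max_size=50)

load = 8000  # hypothetical incoming events per second
collector_count = collectors.desired_capacity(load)
enricher_count = enrichers.desired_capacity(load)
```

Under these assumed numbers the same load calls for 2 collectors but 8 enrichers, which is the "fewer collectors with more enrichers" situation described above. A single shared group would have to size both tiers for the slower one.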

The Benefits

With Snowplow, ClearScale was able to deliver a solution that allowed the client to stream in numerous data sets every second and have them cleansed and enriched very quickly, thereby allowing for quick analysis. Where it once took minutes or potentially hours to analyze data, the client could now do so in a matter of seconds. This near-real-time feedback loop was possible due in large part to how Snowplow was implemented and how it leverages AWS Kinesis. As AWS services continue to grow, the providers of the Snowplow application expect the product to keep evolving in ways that will benefit customers in the years to come.

ClearScale’s ability to quickly assess the client’s needs and implement Snowplow Analytics has given the client the ability to analyze data quickly without worrying about how much data needs to be processed. This in turn gives the client an advantage over the competition in identifying, in near real time, the news and media events that are important to its customer base.

ClearScale’s time-proven results have demonstrated its ability to assess a client’s true needs, design and implement solutions that are robust and scalable, and serve as a true partner in whatever endeavor your organization wants to undertake.

Learn more about ClearScale’s data and analytics services here.