Dynamic Orchestration Workflow Using Apache Airflow

May 7, 2018

Data drives everything from business strategy and decisions to near real-time algorithmic workflows. In a data-rich world, capturing and processing that information is a monumental task, even with a clearly thought-out architecture. When new data sources require extraction, transformation, and loading (ETL) into a data store, the work is often done with command-line requests, scheduled cron jobs, or scripts that call other scripts. The complexity of legacy cron jobs and scripts makes processing data harder just as the business demands greater visibility into analytics and into patterns in the data that can drive how systems respond to outside stimuli.

It’s this complexity that ultimately prevents companies from using their data, reacting to it, or proactively executing behaviors designed to meet the needs of the business. Authoring, scheduling, maintaining, and troubleshooting ETL jobs is a highly inefficient use of resources, and it gets worse when cron jobs and scripts that have grown up reactively over time become an impediment to progress. One company in the hospitality industry discovered how true this was when they realized that, to effectively scale and maintain their data stores and carry out their functional strategies, they would need a more effective solution.

The Challenge – Implementing Scalable Orchestration Framework

Having heard of Apache Airflow, the client approached ClearScale, an AWS Premier Consulting Partner, to see whether this dynamically triggered workflow product would suit their growing needs. The client had already deployed a distributed data acquisition layer, built on Python and Celery, that pulled in data from third parties and stored it in a PostgreSQL data store, coordinated by an internal orchestration framework. They needed a more robust methodology to evolve their business, as well as an extensible framework that would allow additional data sources to be added in the future without an excessive amount of integration work.

Specifically, they needed to find and implement a scalable orchestration framework that was much more dynamic and automated than what they already had, and that could trigger other workflows based on the data that was analyzed. Moreover, they needed to manage and monitor workflows through visual UI components instead of command-line interfaces, which would ideally allow them to actively monitor and respond to issues quickly.

As an open-source tool, Apache Airflow provides the ability to orchestrate complex computational workflows. A workflow is defined in Python code as a directed acyclic graph (DAG) of tasks, and a web-based user interface lets an administrator visualize, monitor, and manage it. The DAG divides the work into tasks that can be executed independently, in parallel wherever their dependencies allow, and provides a method whereby workflows can be dynamically triggered based either on what data is ingested or on results stemming from an analysis of the data. This means that complex data processing and analysis can be designed and managed in a structured way for optimum processing.
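To make the DAG idea concrete, here is a minimal sketch that uses only Python's standard library (`graphlib`, Python 3.9+), not Airflow's own API. The task names and the `parallel_batches` helper are hypothetical and chosen purely for illustration. The sketch groups tasks into batches whose members have no unmet dependencies and could therefore run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
workflow = {
    "extract_bookings": set(),
    "extract_reviews": set(),
    "transform_bookings": {"extract_bookings"},
    "transform_reviews": {"extract_reviews"},
    "load_warehouse": {"transform_bookings", "transform_reviews"},
}


def parallel_batches(dag):
    """Group tasks into batches; tasks within one batch are independent of each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    batches = []
    while sorter.is_active():
        # Every task returned here has all of its dependencies completed.
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished so dependents become ready
    return batches


if __name__ == "__main__":
    for number, batch in enumerate(parallel_batches(workflow), start=1):
        print(number, batch)
```

In this sketch the two extract tasks form the first batch, the two transform tasks the second, and the load task runs last. A real scheduler such as Airflow adds retries, scheduling, and distributed execution on top of the same dependency-ordering idea.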

The Solution – Apache Airflow

ClearScale determined that the best way to find out whether Airflow would suit the client’s needs was to build a proof of concept (PoC). Although AWS provides a service called Step Functions that does provide a methodology for importing data sets for analysis, it falls short of what Airflow offers for highly complex workflows. Knowing that the client needed workflows with thousands of data collection tasks running in parallel, along with tasks that had to run dynamically based on the results of the tasks just before them, ClearScale performed an in-depth analysis of the tool. The initial investigation showed that although Airflow could process a small sample set of coarse-grained tasks, it fared poorly with a larger set of finer-grained tasks. This was especially evident in the management user interfaces, which quickly became unresponsive when dealing with hundreds of parallel tasks.
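The requirement that some tasks run dynamically based on the results of earlier ones can be illustrated with a small, library-free sketch. Everything here is an assumption made for illustration: the `CollectionResult` fields, the `next_tasks` function, and the task-name strings are hypothetical and do not come from the client's system or from Airflow's API.

```python
from dataclasses import dataclass


@dataclass
class CollectionResult:
    """Hypothetical outcome of one data collection task."""
    source: str
    row_count: int
    schema_ok: bool


def next_tasks(result):
    """Choose follow-up task names based on the outcome of a finished task."""
    if not result.schema_ok:
        # Unexpected schema: set the data aside and notify an operator.
        return [f"quarantine:{result.source}", f"alert_operator:{result.source}"]
    if result.row_count == 0:
        # Nothing was collected, so there is nothing to process downstream.
        return []
    return [f"transform:{result.source}", f"load:{result.source}"]


if __name__ == "__main__":
    print(next_tasks(CollectionResult("partner_feed", 1200, True)))
    print(next_tasks(CollectionResult("new_vendor", 300, False)))
```

The point of the sketch is only that the set of downstream tasks is computed at run time from the data, rather than being fixed when the workflow is written.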

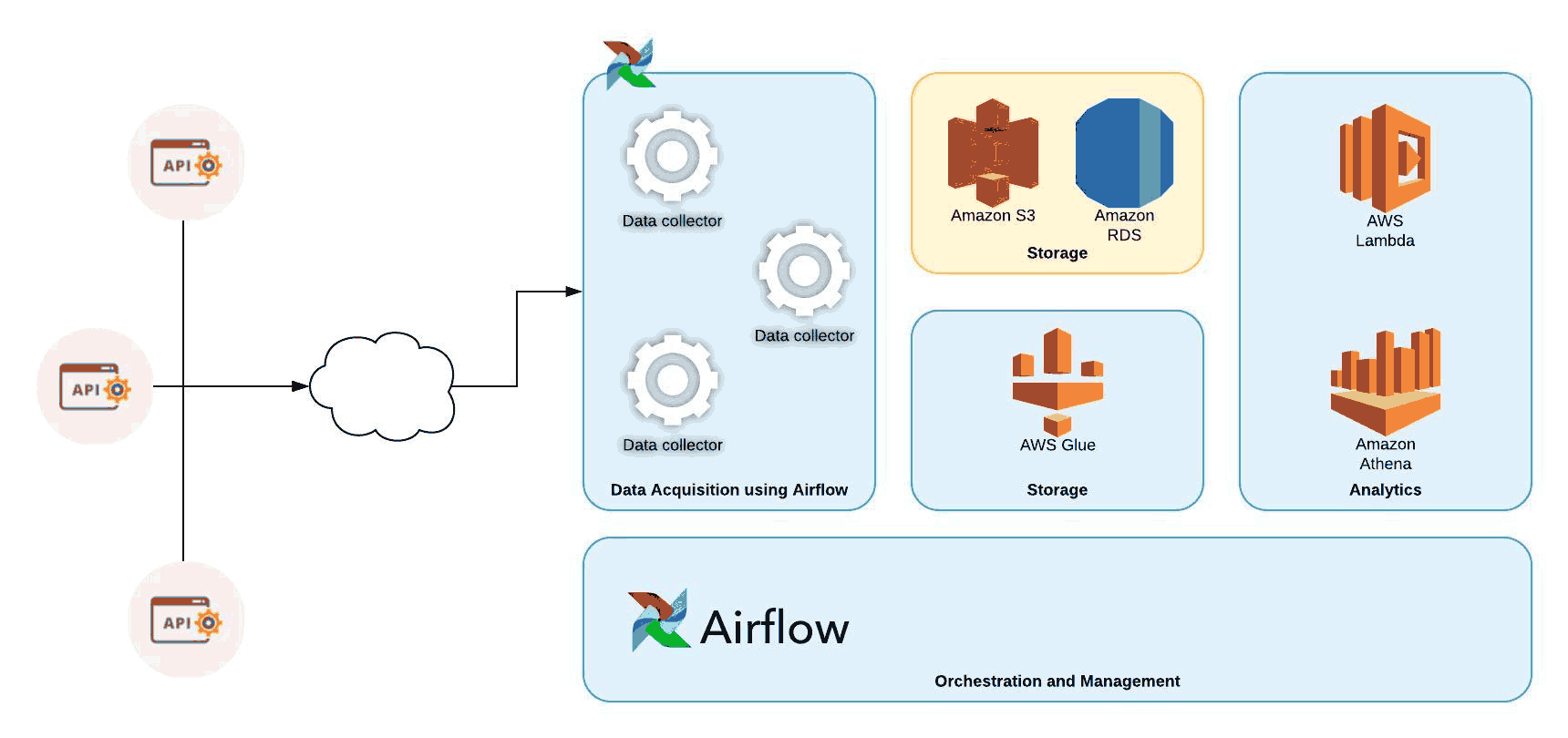

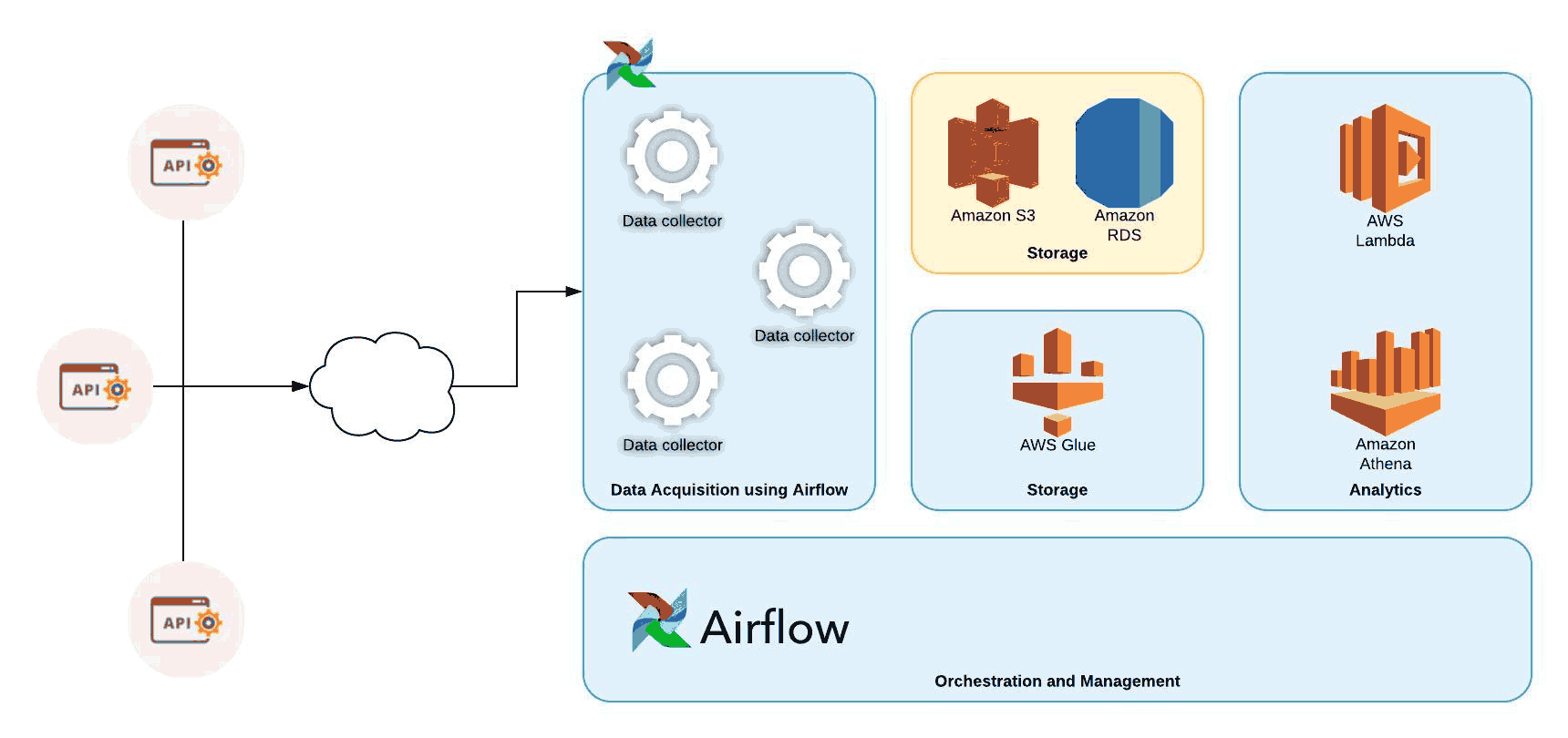

To overcome this, ClearScale built an AWS service architecture around Apache Airflow that supported several prototype dynamic workflows based on the client’s requirements, without the detrimental effects of a straight implementation of Airflow. The solution provided a data-acquisition framework utilizing Kinesis. ClearScale also ran performance measurements and produced reports to assess the PoC, and determined that the approach would suit the needs of the client.

Apache Airflow Diagram

The Benefits

The AWS-based proof of concept demonstrated that, with the architecture ClearScale built around Apache Airflow, the data acquisition framework would be able to scale to accommodate large data volumes. It also showed that adding data sources would require minimal development resources, making the solution much more extensible than the home-grown solution the client had maintained previously. Finally, from a maintainability perspective, the visual user interfaces in the ClearScale solution allowed system operators to quickly discover and resolve issues as they arose, such as processing jobs failing because of data schema and format problems in new data sources.

ClearScale’s expertise in merging AWS services with third-party products to build robust and scalable solutions has served our customer base well since 2011. While AWS-only implementations can often serve our clients well, there are times when certain AWS services do not provide a comprehensive solution to meet their needs. In those cases, a hybrid solution that takes off-the-shelf technologies and merges them with a robust AWS architecture is often the best approach to dealing with complex business situations. Placing your trust in a partner like ClearScale means that, whatever your business needs, you can rest assured that the final deliverable will meet and exceed your requirements.