Building Next-Gen Applications with Serverless Infrastructure

Mar 14, 2023

By Anthony Loss, Director of Solution Strategy, ClearScale

The cloud has completely changed the game when it comes to building applications. Engineering teams don’t have to settle for monolithic apps or hard-to-manage infrastructure anymore. And with the right cloud service vendor (our choice: Amazon Web Services), making the jump into the modern era of application building is easy.

In this post, we’ll explain why legacy applications fall short and how teams can upgrade the services they offer. We’ll also answer some of the most common questions we receive on this topic to give you confidence that the right serverless solution is out there.

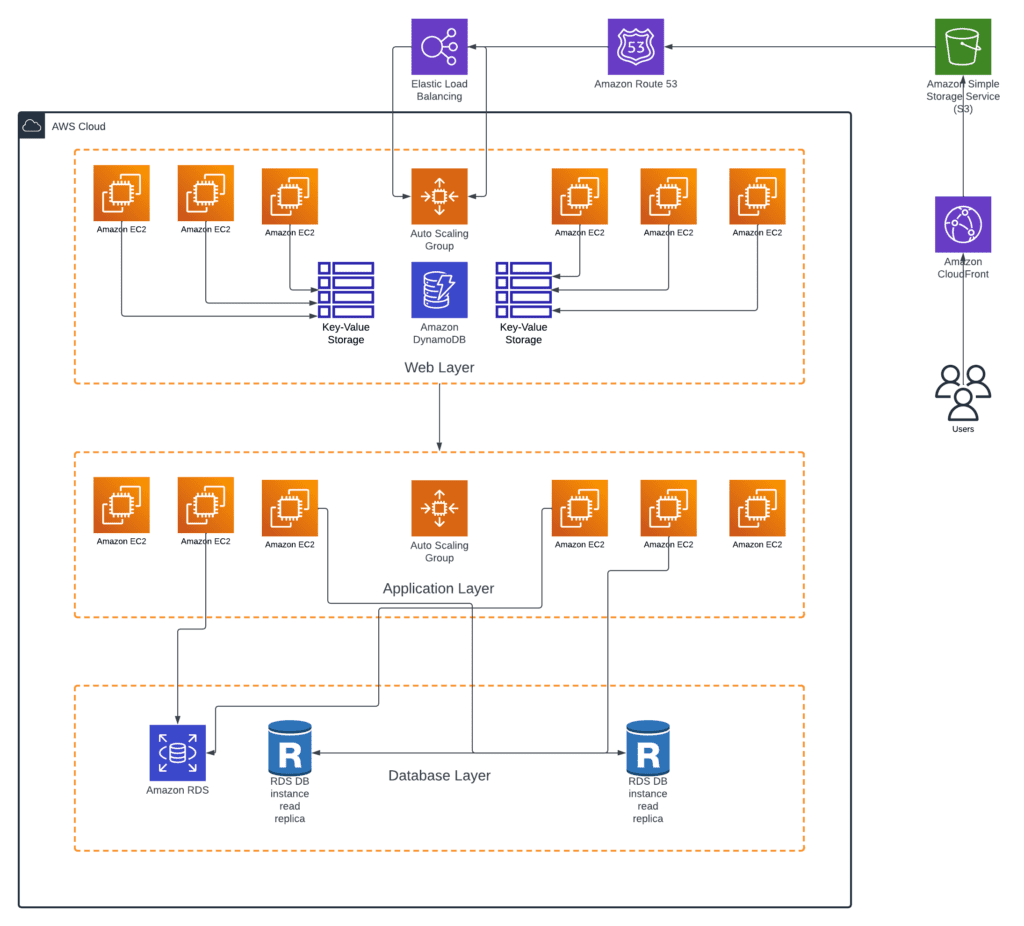

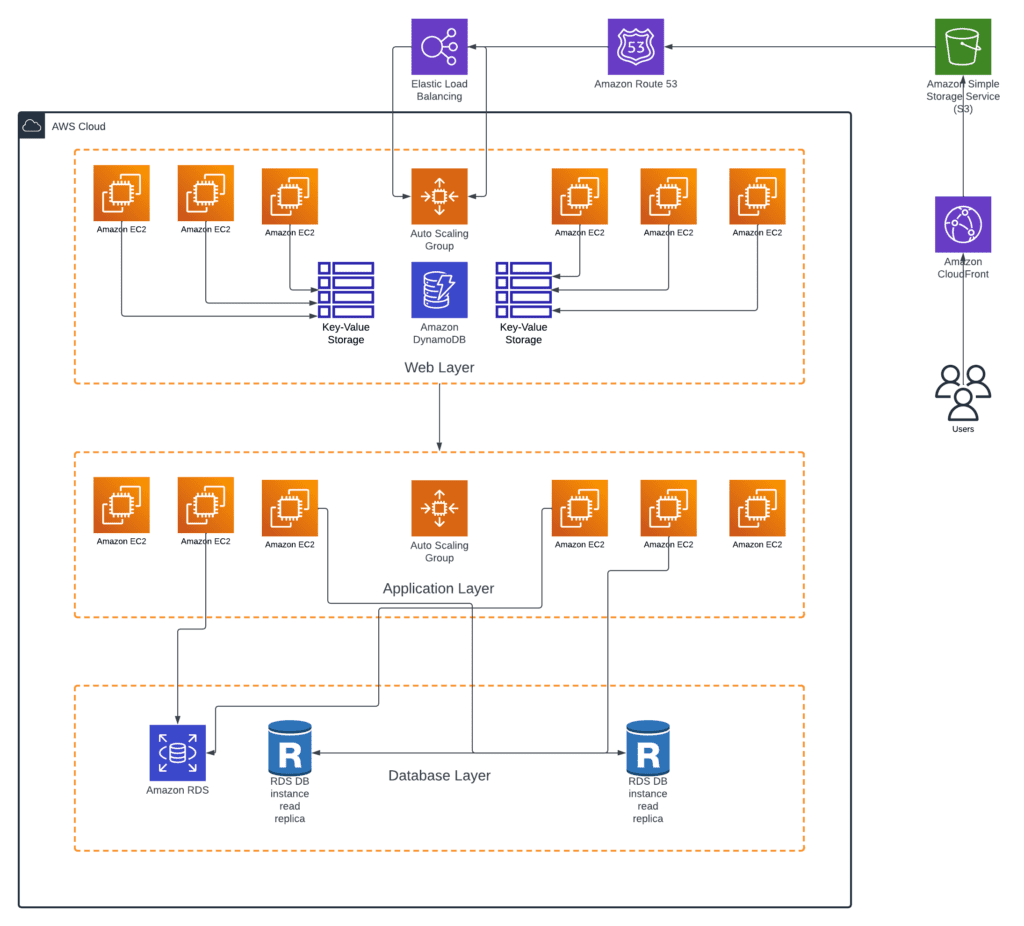

Stateless vs. Stateful Architecture Designs

Monolithic applications have traditionally depended on stateful architectures in which user session information is stored on a server rather than on external persistent database storage. This server-based session storage gets in the way of modern application design.

The problem with stateful session storage is that session information can’t be shared across multiple servers. Once a user establishes a session on a particular server, every subsequent request has to go back to that same server (a “sticky session”) until the transaction completes. Load balancers also can’t distribute requests “round-robin” across multiple servers, so applications can’t scale horizontally.

Fortunately, there is a better model: stateless architecture. If companies store user session information in a persistent database layer rather than on local servers, any server or microservice can read and update that state. This makes load balancing and horizontal scaling straightforward and opens the door to service-oriented architectures (SOA).

A stateless application architecture
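To make the stateless model concrete, here is a minimal sketch, assuming a hypothetical `SessionStore` class that stands in for an external, persistent session store (in practice a shared database or cache; a dict is used here only so the example runs locally). Because no server keeps session data of its own, a naive round-robin balancer can send each request to a different server and the user’s cart still accumulates correctly.

```python
import itertools
import uuid


class SessionStore:
    """Hypothetical stand-in for an external, persistent session store."""

    def __init__(self):
        self._data = {}

    def get(self, session_id):
        # Return a copy so callers never mutate stored state by accident.
        return dict(self._data.get(session_id, {}))

    def put(self, session_id, state):
        self._data[session_id] = dict(state)


class StatelessServer:
    """A server that reads and writes all session state through the shared store."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def add_to_cart(self, session_id, item):
        state = self.store.get(session_id)          # load state from the shared store
        cart = state.get("cart", []) + [item]
        self.store.put(session_id, {**state, "cart": cart})  # write it back
        return self.name, cart


store = SessionStore()
servers = [StatelessServer(f"server-{i}", store) for i in range(3)]
round_robin = itertools.cycle(servers)  # naive round-robin load balancer

session_id = str(uuid.uuid4())
for item in ["book", "lamp", "mug"]:
    handled_by, cart = next(round_robin).add_to_cart(session_id, item)
    print(handled_by, cart)
```

Each of the three requests lands on a different server, yet the final cart contains all three items, which is exactly what sticky, server-local sessions prevent.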

Service-oriented Architecture (SOA)

Service-oriented architectures (SOAs) use components called “services” to create business applications. Each service has a specific business purpose and can communicate with other services across various platforms and languages. The goal of an SOA model is to implement loose coupling, enabling teams to optimize, enhance, and scale services individually. But the challenge with SOA is that it requires more nuanced governance.

Simple Object Access Protocol (SOAP) and Representational State Transfer (REST) are the two primary approaches used to implement SOA with appropriate governance. SOAP is an older, heavier messaging protocol that relies on XML for data exchange. REST is a newer, more lightweight architectural style that supports JSON, plain text, HTML, and XML data exchanges. The good news is that going the REST route is easy on AWS with a service like Amazon API Gateway.
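One visible difference between the two approaches is payload overhead. The sketch below returns the same hypothetical order record once wrapped in a SOAP 1.1 envelope and once as plain JSON. The `GetOrderResponse` element and the record fields are illustrative assumptions, and payload size is only one part of the comparison (SOAP also brings its own tooling and standards).

```python
import json
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
ET.register_namespace("soap", SOAP_ENV)

order = {"id": "A123", "status": "shipped"}  # hypothetical order record


def soap_response(record):
    """Wrap the record in a SOAP envelope (GetOrderResponse is a hypothetical operation)."""
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    result = ET.SubElement(body, "GetOrderResponse")
    for key, value in record.items():
        ET.SubElement(result, key).text = value
    return ET.tostring(envelope, encoding="unicode")


def rest_response(record):
    """A REST endpoint can return the same record as plain JSON."""
    return json.dumps(record)


soap_payload = soap_response(order)
rest_payload = rest_response(order)
print(len(soap_payload), soap_payload)
print(len(rest_payload), rest_payload)
```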

Amazon API Gateway gives engineers the ability to put RESTful endpoints in front of Lambda functions and expose them as “microservices.” Going stateless with REST sets the stage for game-changing microservices-based applications.
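As an illustration, a Lambda function behind an API Gateway REST API with Lambda proxy integration receives the HTTP request as an `event` dict and returns a dict containing `statusCode`, `headers`, and a string `body`. The sketch below assumes that proxy payload format and a hypothetical `GET /orders/{orderId}` route; the in-memory `ORDERS` dict stands in for the service’s own data store.

```python
import json

# Hypothetical data; a real microservice would query its own data store.
ORDERS = {"A123": {"id": "A123", "status": "shipped"}}


def _response(status_code, payload):
    """Build the response shape that a Lambda proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),  # the body must be a string
    }


def lambda_handler(event, context):
    """Handle GET /orders/{orderId} (route and parameter name are assumptions)."""
    if event.get("httpMethod") != "GET":
        return _response(405, {"message": "method not allowed"})
    # pathParameters can be null in proxy events, so fall back to an empty dict.
    order_id = (event.get("pathParameters") or {}).get("orderId")
    order = ORDERS.get(order_id)
    if order is None:
        return _response(404, {"message": "order not found"})
    return _response(200, order)


example_event = {"httpMethod": "GET", "pathParameters": {"orderId": "A123"}}
print(lambda_handler(example_event, None))
```

The handler holds no session state between invocations, so Lambda can run as many copies in parallel as traffic requires.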

Microservices Best Practices

The clear advantage of microservices is that discrete business functions can scale and evolve independently with dedicated IT infrastructure. Engineers can also maintain a much smaller surface area of code and make better choices when it comes to supporting individual services.

Best practices for designing microservices architecture include:

- Creating a separate data store for each microservice so that individual teams can choose the ideal database for their needs

- Keeping servers stateless to enable scalability

- Creating a separate pipeline for each microservice so that teams can make changes and improvements without impacting other services that are running

- Deploying apps in containers to ensure standardization across services

- Using blue-green or canary deployments to shift traffic (with canaries, only a small percentage at first) to new service versions and confirm that services are working as expected

- Monitoring environments continuously to be proactive in preventing outages
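The traffic-shifting practice in the list above can be sketched as a weighted router. This is a minimal illustration, not how any particular AWS service implements it: it assumes hypothetical `"blue"` (current) and `"green"` (new) version labels and hashes a user ID so each caller consistently lands on the same version while the new release is evaluated.

```python
import hashlib


def route(user_id, canary_percent):
    """Send roughly `canary_percent` percent of users to the new ("green") version.

    A stable hash (not Python's randomized hash()) keeps each user's
    assignment consistent across requests and processes.
    """
    digest = hashlib.sha256(user_id.encode()).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100  # bucket in 0..99
    return "green" if bucket < canary_percent else "blue"


counts = {"blue": 0, "green": 0}
for i in range(10_000):
    counts[route(f"user-{i}", canary_percent=5)] += 1
print(counts)  # roughly 5% green
```

If monitoring looks healthy, `canary_percent` is raised step by step to 100; if not, setting it back to 0 routes everyone to the previous version.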

When all of this is done in a serverless fashion, engineers have much less infrastructure to manage themselves. They can focus more on application code and less on operating systems, server specifications, autoscaling details, and overall maintenance. Serverless microservices that keep session state in an external store and communicate through REST APIs are the core ingredients of next-gen applications.

With that being said, there are aspects of this strategy that can be confusing to new adopters, such as the need for containers and how to deploy event-driven applications. We’ll touch on these concerns next.

Why Do We Need Containers If We Have Instances?

When educating clients on the benefits of serverless infrastructure and containers, we’re often asked why we need containers at all. Don’t instances already provide isolation from underlying hardware? Yes, but containers and Docker, specifically, provide other important benefits.

Docker allows users to make fuller use of VM resources by hosting multiple applications (on distinct ports) on the same instance. Containers also give engineering teams portable runtime environments that bundle an application with everything it needs to run. This allows engineers to build an application image once and then deploy it on any host with a compatible container runtime, largely independent of the host’s operating system distribution.
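The resource-utilization point can be illustrated with a toy placement routine. Orchestrators such as Amazon ECS handle container placement with far richer rules; this sketch only assumes memory as the single constraint, uses first-fit decreasing packing, and gives each container a distinct host port starting from a hypothetical 8080. The app names and sizes are made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    name: str
    memory_mb: int
    containers: list = field(default_factory=list)  # (app, memory_mb, host_port)
    next_port: int = 8080  # hypothetical first host port

    def free_mb(self):
        return self.memory_mb - sum(mem for _, mem, _ in self.containers)


def place(apps, instances):
    """First-fit decreasing: place the largest apps first on the first instance with room."""
    for app, mem in sorted(apps.items(), key=lambda kv: kv[1], reverse=True):
        for inst in instances:
            if inst.free_mb() >= mem:
                inst.containers.append((app, mem, inst.next_port))  # distinct port per app
                inst.next_port += 1
                break
        else:
            raise RuntimeError(f"no capacity for {app}")
    return instances


apps = {"api": 1024, "worker": 2048, "web": 512, "cron": 256}
instances = place(apps, [Instance("vm-1", 4096)])
print(instances[0].containers, instances[0].free_mb())
```

All four apps share one 4 GB instance instead of each occupying its own mostly idle VM.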

Deployment cycles are also faster with containers, and it’s easier to leverage infrastructure automation. Furthermore, it’s possible to run different versions of an application side by side and to package an application and its dependencies into a single artifact.

What Happens If a Particular Service Goes Down?

Good question. We recommend using a service like Amazon SQS, a fully managed message queuing service that works well with serverless applications.

A queue-based architecture like this reduces the risk of data loss during outages by placing message queues between services (REST endpoints). If a downstream service goes down, the queue holds its messages until the service recovers and can process them.
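The mechanism that makes this safe in SQS is that a received message is hidden for a visibility timeout rather than deleted; the consumer deletes it only after successful processing, and otherwise it becomes visible again for a retry. The in-memory `ToyQueue` below is a simplified, hypothetical imitation of that behavior (not the SQS API), with an injectable clock so the outage can be simulated.

```python
import time


class ToyQueue:
    """Simplified in-memory queue with SQS-like visibility timeouts (hypothetical)."""

    def __init__(self, visibility_timeout=30.0, clock=time.monotonic):
        self.visibility_timeout = visibility_timeout
        self.clock = clock
        self._messages = {}      # message id -> body
        self._hidden_until = {}  # message id -> time it becomes visible again
        self._next_id = 0

    def send(self, body):
        self._next_id += 1
        self._messages[self._next_id] = body
        return self._next_id

    def receive(self):
        now = self.clock()
        for msg_id, body in self._messages.items():
            if self._hidden_until.get(msg_id, 0) <= now:
                # Hide the message instead of deleting it.
                self._hidden_until[msg_id] = now + self.visibility_timeout
                return msg_id, body
        return None

    def delete(self, msg_id):
        self._messages.pop(msg_id, None)
        self._hidden_until.pop(msg_id, None)


def process_one(queue, handler):
    """Delete a message only if the handler succeeds; otherwise it will reappear."""
    msg = queue.receive()
    if msg is None:
        return False
    msg_id, body = msg
    try:
        handler(body)
    except Exception:
        return False
    queue.delete(msg_id)
    return True


def service_down(body):
    raise ConnectionError("downstream service unavailable")


now = [0.0]
q = ToyQueue(visibility_timeout=30, clock=lambda: now[0])
q.send({"order_id": "A123"})

processed = []
first_attempt = process_one(q, service_down)        # fails; message stays queued
now[0] += 31                                         # visibility timeout expires
second_attempt = process_one(q, processed.append)   # service has recovered
print(first_attempt, second_attempt, processed)
```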

What about Event-driven Architecture?

Event-driven architecture can be implemented on serverless infrastructure through either a pub/sub model or an event stream model. With the pub/sub model, each published event is delivered as a notification to all subscribers, and each subscriber responds according to its own data processing requirements. The service we recommend for this is Amazon SNS, a fully managed pub/sub messaging service that integrates with serverless applications (for example, by invoking AWS Lambda functions as subscribers).
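The fan-out behavior of pub/sub can be sketched in a few lines. This in-process `Topic` class is a hypothetical stand-in for a managed service like SNS, and unlike SNS it delivers synchronously; the point is only that one published event reaches every subscriber, and each subscriber does its own work with it.

```python
from collections import defaultdict


class Topic:
    """Minimal in-process pub/sub topic (hypothetical stand-in for SNS)."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, message):
        # Every subscriber gets its own copy of the published message.
        for callback in self._subscribers:
            callback(dict(message))


sent_emails, reserved, analytics = [], defaultdict(int), []


def send_confirmation(msg):
    sent_emails.append(msg["customer"])


def reserve_stock(msg):
    reserved[msg["sku"]] += msg["qty"]


def record_event(msg):
    analytics.append(msg["type"])


orders = Topic()
for subscriber in (send_confirmation, reserve_stock, record_event):
    orders.subscribe(subscriber)

orders.publish({"type": "OrderPlaced", "customer": "ana@example.com", "sku": "LAMP-1", "qty": 2})
print(sent_emails, dict(reserved), analytics)
```

The publisher never needs to know which services are listening, so new subscribers can be added without changing it.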

On the event stream model side, engineers can set up consumers to read from a continuous flow of events that come from a producer. For example, this could mean capturing a continuous clickstream log and sending alerts if any anomalies are detected. We turn to Amazon Kinesis for this type of functionality.
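The clickstream example can be sketched as a consumer that reads ordered `(timestamp, user_id)` events and keeps a sliding time window per user. In a real deployment the events would come from a Kinesis data stream (for example, through a Lambda event source mapping); here a plain Python list stands in for the stream, and the 60-second window and 100-click threshold are arbitrary assumptions.

```python
from collections import deque


def detect_spikes(events, window_seconds=60, threshold=100):
    """Yield an alert when a user exceeds `threshold` clicks within `window_seconds`.

    `events` must be ordered by timestamp, as records within a stream shard are.
    An alert fires once each time a user crosses the threshold.
    """
    windows = {}  # user_id -> deque of recent click timestamps
    for ts, user in events:
        window = windows.setdefault(user, deque())
        window.append(ts)
        # Drop clicks that have fallen out of the time window.
        while window and window[0] <= ts - window_seconds:
            window.popleft()
        if len(window) == threshold + 1:
            yield ts, user, len(window)


# A normal user clicks every 10 s; a bot fires 200 clicks in about 20 s.
stream = sorted(
    [(t, "alice") for t in range(0, 600, 10)]
    + [(100 + i * 0.1, "bot-7") for i in range(200)]
)
alerts = list(detect_spikes(stream))
print(alerts)
```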

Go Serverless with ClearScale

For those who have other questions or want to learn more about how to deploy and optimize serverless infrastructure on the AWS cloud, we’d love to hear from you. We work with clients across many industries to build next-gen applications that are efficient, scalable, and reliable. Our cloud engineers have extensive experience with all the methods and tools described above so that we can hit the ground running on any project.

Schedule a call with us today to share more about your serverless project and learn how we can make it happen. You can also watch this free on-demand webinar to go deeper on your own time.

Get in touch today to speak with a cloud expert and discuss how we can help:

Call us at 1-800-591-0442

Send us an email at sales@clearscale.com