Best Practices for Kubernetes on AWS – Takeaways from re:Invent 2020

Dec 18, 2020

AWS re:Invent 2020 was jam-packed with cloud insights, tips, and demonstrations. We especially enjoyed learning more about how to leverage Kubernetes and optimize infrastructure for containerized applications on AWS.

Our Technical Lead from the Systems Engineering team, Alexander Ivenin, sat in on sessions that covered a wide range of Kubernetes-related topics, including how to migrate legacy applications to containers and how to operate Kubernetes clusters with Amazon Elastic Kubernetes Service (EKS). We also learned best practices for how to integrate EKS with other AWS products to enhance security and performance.

In this post, we’ll pass on wisdom from re:Invent 2020 and share the many reasons why you should consider Kubernetes on AWS for your containerized applications. We’ll also leave you with a handy reference list of all the Kubernetes best practices we’ll be following in 2021.

A Brief Kubernetes Introduction

Kubernetes was released in 2014 on the heels of the microservices movement. Microservices architecture remains popular today for modern app development because it enables developers to create loosely coupled services that can be developed, deployed, and maintained independently.

Once Kubernetes hit the scene, developers had an easier way to orchestrate containerized applications, which are often built using a microservices architecture. Kubernetes also helps IT teams manage the full lifecycle of their modernized applications.

Today, the question many are facing is how to migrate legacy applications into containers that thrive on the cloud. Fortunately, there are many compelling reasons and useful tools for ensuring migration success.

Why Migrate Legacy Applications to Containers

There are many advantages to migrating legacy applications to containers.

First, containerized apps are more agile. Engineers can develop and deploy applications faster to achieve overarching business goals. The loosely coupled nature of containerized apps allows developers to upgrade, repair, and scale discrete services.

Second, containerized apps are portable. In migrating legacy applications to containers, developers can package everything needed to run applications into one transferable unit.

Third, containerized applications are efficient. Because containers share the host operating system's kernel, they use fewer resources than virtual machines and require less operational IT overhead.

Of course, there are some roadblocks. Not all legacy applications fit neatly into containers. In many cases, engineers have to refactor applications to prepare them for containerization. In addition, many organizations lack the in-house expertise or capacity for this work, so it tends to fall to the bottom of the business priority list.

Containerization Migration Approaches

Businesses that do have the time and skills to migrate legacy applications to containers can follow one of several approaches. They can migrate workloads using a lift-and-shift process, which we don’t recommend in most cases. Instead, we suggest leaders modernize their applications with new infrastructure that is designed to thrive on the cloud.

Modernizing legacy applications during a migration can be a complex endeavor, but the effort is well worth the investment if executed correctly. Assuming that modernization is possible, IT teams can follow the steps below to migrate apps to microservices-enabled infrastructure:

- Take inventory: Gather all information about network communications, protocols, security, configuration and logs, connection strings, storage data, sessions, and scheduled tasks for your target application.

- Prepare for containerization: Use the twelve-factor methodology to guide you in porting between environments and migrating to the cloud. See AWS App2Container for more information on how to containerize your Java or .NET applications to run on EKS.

- Build and publish your containers: Build Docker images from a Dockerfile and push them to a container registry such as Amazon Elastic Container Registry (ECR). You can automate your build and publishing processes with tools like AWS CodePipeline.

- Launch your application in a microservice environment: Run your image on Amazon Elastic Container Service (ECS) or Amazon EKS, using either AWS Fargate or EC2 instances for compute, or on a self-managed Kubernetes cluster on EC2 instances.
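In the "prepare for containerization" step, one of the most common changes is moving settings such as connection strings out of code and config files and into environment variables, as the twelve-factor methodology recommends. The Python sketch below is a minimal illustration of that idea, not part of App2Container; the variable names `DATABASE_URL` and `LOG_LEVEL` are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    log_level: str


def load_config(env: Optional[Mapping[str, str]] = None) -> AppConfig:
    """Read settings from the environment (twelve-factor "config" rule).

    DATABASE_URL and LOG_LEVEL are hypothetical variable names.
    """
    env = os.environ if env is None else env
    database_url = env.get("DATABASE_URL")
    if not database_url:
        # Fail fast at startup rather than silently using a hardcoded default.
        raise RuntimeError("DATABASE_URL must be set")
    return AppConfig(database_url=database_url,
                     log_level=env.get("LOG_LEVEL", "INFO"))


if __name__ == "__main__":
    # Normally ECS or Kubernetes injects these values into the container.
    print(load_config({"DATABASE_URL": "postgresql://db.example.internal/app"}))
```

With configuration read this way, the same image can run unchanged in development, staging, and production; only the environment injected at launch differs.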

Optimizing Operations Around Your Containers

Once your containers are up and running, you have to think about how to optimize infrastructure for day-to-day operations. Fortunately, AWS makes this easy with services like Amazon CloudWatch.

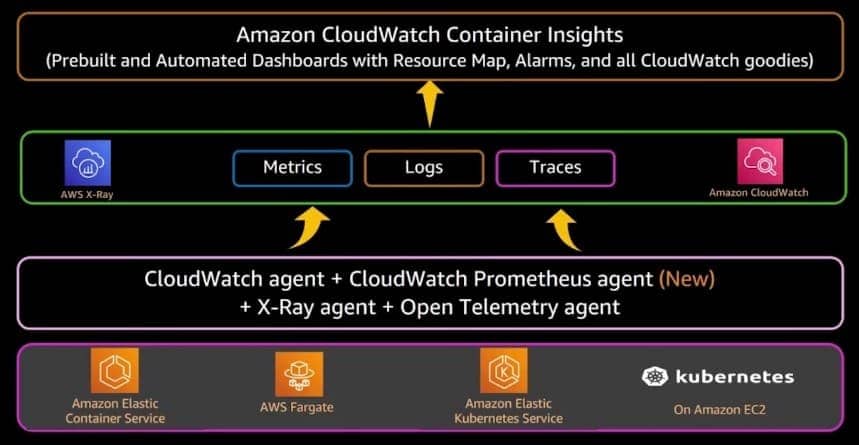

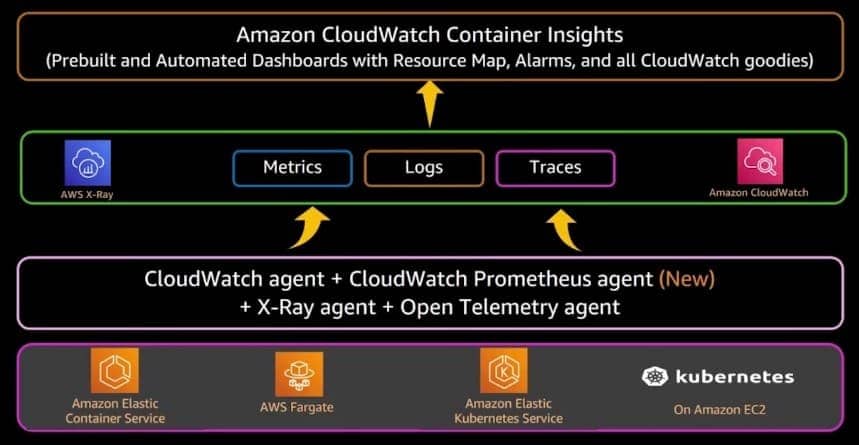

CloudWatch enables users to collect metrics, logs, and traces from applications using various agents and store them centrally. AWS has even created container-specific tooling within CloudWatch, such as CloudWatch Container Insights. The service aggregates metrics and logs for containerized applications and comes with prebuilt dashboards, including resource maps and alarms for dynamic monitoring. With CloudWatch, users get a comprehensive view into the performance of their containerized applications, so they can troubleshoot issues quickly by drilling down into specific components.
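Container Insights covers infrastructure-level metrics. For application-level metrics, one option is CloudWatch embedded metric format (EMF): the application writes a structured JSON log line, and CloudWatch can extract a metric from it once the line reaches CloudWatch Logs through a log shipper configured for EMF. The sketch below only builds such a line; the namespace, metric, and dimension names are hypothetical, and the log-shipping setup is assumed rather than shown.

```python
import json
import time
from typing import Dict, Optional


def emf_record(namespace: str, metric: str, value: float, unit: str,
               dimensions: Dict[str, str],
               timestamp_ms: Optional[int] = None) -> str:
    """Build one CloudWatch embedded metric format (EMF) log line."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    record = {
        "_aws": {
            "Timestamp": timestamp_ms,
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                # A single dimension set made of every dimension key passed in.
                "Dimensions": [sorted(dimensions)],
                "Metrics": [{"Name": metric, "Unit": unit}],
            }],
        },
        # Dimension values and the metric value sit at the top level of the record.
        **dimensions,
        metric: value,
    }
    return json.dumps(record)


if __name__ == "__main__":
    # Hypothetical namespace, metric, and dimension names; the line goes to stdout.
    print(emf_record("MyApp", "CheckoutLatency", 123.0, "Milliseconds",
                     {"Service": "checkout"}))
```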

Bolstering Container Security

Securing containerized applications is crucial and requires a different approach than securing virtual machines. You have to think about every layer of your environment, beginning with your hosts, then your container images, and finally your pods at runtime.

If you implement Amazon EKS, you can take advantage of its managed service capabilities, which make security much easier. AWS manages the Kubernetes control plane on your behalf, including securing all control plane components.

For worker nodes, EKS offers two managed options: managed node groups and AWS Fargate. With managed node groups, EKS provisions and manages the lifecycle of your EC2 worker nodes, but you remain responsible for keeping them patched and hardened, particularly if you supply your own AMI. With Fargate, nodes are abstracted away entirely and AWS manages the underlying infrastructure.

In general, we recommend outsourcing whatever responsibilities you can to AWS, so that your team can focus on higher-value activities.

Kubernetes Best Practices By Infrastructure Layer

Beyond the areas highlighted above, there are many other things to keep in mind when using Kubernetes on AWS, all of which are made easier with Amazon EKS. Below is a best practices reference guide, organized by infrastructure layer, to help you secure and run your containerized applications.

Host Security

- Use a container-optimized OS

- Deploy workers onto private subnets

- Minimize and audit host access regularly

- Use Amazon Inspector to automate security and compliance assessments

- Use SELinux to set access control security policies

Image Security

- Keep images as small as possible

- Remove unnecessary packages

- Scan container images for vulnerabilities regularly

- Remove SETUID and SETGID bits from image files or remove those files entirely

- Avoid running applications as a root user

- Use private endpoints to access your container registry
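Before you can remove SETUID and SETGID bits, you have to find the files that carry them. A minimal standard-library Python sketch, assuming you have unpacked the image's filesystem into a local directory (for example, from the tar archive `docker export` produces for a container created from the image):

```python
import os
import stat
from typing import List

SPECIAL_BITS = stat.S_ISUID | stat.S_ISGID


def find_setuid_setgid(root: str) -> List[str]:
    """Return regular files under root that have the SETUID or SETGID bit set."""
    flagged = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode  # lstat: do not follow symlinks
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if stat.S_ISREG(mode) and mode & SPECIAL_BITS:
                flagged.append(path)
    return sorted(flagged)


def strip_setuid_setgid(paths: List[str]) -> None:
    """Clear SETUID and SETGID while keeping the other permission bits."""
    for path in paths:
        mode = stat.S_IMODE(os.lstat(path).st_mode)
        os.chmod(path, mode & ~SPECIAL_BITS)
```

Clearing the bits, rather than deleting the files, keeps the binaries usable by root while removing them as a privilege-escalation path for other users.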

Identity and Access Management (IAM)

- Use a separate service account for every application

- Map service accounts to IAM roles (IAM roles for service accounts)

- Set minimal necessary permissions

- Disable automatic mounting of the default service account token

- Block pod access to the EC2 instance metadata service (IMDS)
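With IAM roles for service accounts (IRSA), EKS reads the `eks.amazonaws.com/role-arn` annotation on a ServiceAccount. The Python sketch below builds two manifests: a dedicated, role-mapped account for one application and a `default` account whose token is not auto-mounted. It assumes the cluster's IAM OIDC provider and the role's trust policy are already in place; the app name, namespace, and role ARN are hypothetical. Manifests are printed as JSON, which `kubectl apply -f` accepts as well as YAML.

```python
import json
from typing import Any, Dict


def app_service_account(app: str, namespace: str, role_arn: str) -> Dict[str, Any]:
    """A dedicated ServiceAccount for one application, mapped to one IAM role."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": app,
            "namespace": namespace,
            # IRSA: pods that use this account receive credentials for role_arn.
            "annotations": {"eks.amazonaws.com/role-arn": role_arn},
        },
    }


def locked_default_service_account(namespace: str) -> Dict[str, Any]:
    """Stop the namespace's default ServiceAccount token from being auto-mounted."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": "default", "namespace": namespace},
        "automountServiceAccountToken": False,
    }


if __name__ == "__main__":
    # Hypothetical app name, namespace, and IAM role ARN.
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            locked_default_service_account("shop"),
            app_service_account(
                "orders", "shop", "arn:aws:iam::111122223333:role/orders-app"),
        ],
    }
    print(json.dumps(manifests, indent=2))
```

Each pod opts in by setting `serviceAccountName` in its spec, so a compromised pod holds only the permissions of its own role.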

EKS

- Make the cluster API endpoint private

- Audit access to clusters regularly

- Follow the Principle of Least Privilege for Kubernetes RBAC
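On EKS, IAM users and roles are mapped to Kubernetes users and groups through the `aws-auth` ConfigMap, and RBAC then decides what those groups may do. A least-privilege sketch in Python, with hypothetical namespace and group names, that builds a read-only Role and RoleBinding and adds a small check for wildcard rules:

```python
import json
from typing import Any, Dict, List

RBAC_API = "rbac.authorization.k8s.io"


def read_only_pod_access(namespace: str, group: str) -> List[Dict[str, Any]]:
    """A namespaced Role that can only read pods, bound to a single group."""
    role = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",  # namespaced, unlike a ClusterRole
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group, where pods live
            "resources": ["pods", "pods/log"],
            "verbs": ["get", "list", "watch"],
        }],
    }
    binding = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_API}],
        "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_API},
    }
    return [role, binding]


def has_wildcards(role: Dict[str, Any]) -> bool:
    """Flag rules that grant '*' API groups, resources, or verbs."""
    return any(
        "*" in rule.get(key, [])
        for rule in role.get("rules", [])
        for key in ("apiGroups", "resources", "verbs")
    )


if __name__ == "__main__":
    # "shop" and "shop-viewers" are hypothetical names.
    items = read_only_pod_access("shop", "shop-viewers")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```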

Pod and Runtime Security

- Use pod security policies or Open Policy Agent (OPA) Gatekeeper

- Implement the following runtime security measures:

  - Deny privilege escalation

  - Deny running as root

  - Deny mounting hostPath

  - Drop Linux capabilities

  - Set exclusions when appropriate

- Complement pod security policies with AppArmor or seccomp profiles
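Pod security policies are cluster objects and Gatekeeper policies are written in Rego, so neither is reproduced here. To make the runtime measures above concrete, the following plain-Python sketch applies the same checks to a Pod manifest. It illustrates the rules only and is not an admission controller; exempting `kube-system` by default is an assumption standing in for "set exclusions when appropriate".

```python
from typing import Any, Dict, Iterable, List


def pod_violations(pod: Dict[str, Any],
                   exempt_namespaces: Iterable[str] = ("kube-system",)) -> List[str]:
    """Return runtime-policy violations for a Pod manifest (empty means compliant)."""
    if pod.get("metadata", {}).get("namespace") in set(exempt_namespaces):
        return []  # explicit exclusion, e.g. for system add-ons
    spec = pod.get("spec", {})
    problems = []
    for vol in spec.get("volumes", []):
        if "hostPath" in vol:
            problems.append(f"volume {vol.get('name')}: hostPath is not allowed")
    pod_non_root = spec.get("securityContext", {}).get("runAsNonRoot") is True
    for c in spec.get("containers", []) + spec.get("initContainers", []):
        name = c.get("name", "?")
        sc = c.get("securityContext", {})
        if sc.get("privileged"):
            problems.append(f"{name}: privileged containers are not allowed")
        # Unset allowPrivilegeEscalation is treated as allowed, so require False.
        if sc.get("allowPrivilegeEscalation") is not False:
            problems.append(f"{name}: set allowPrivilegeEscalation: false")
        # A container-level runAsNonRoot overrides the pod-level setting.
        if sc.get("runAsNonRoot", pod_non_root) is not True:
            problems.append(f"{name}: set runAsNonRoot: true")
        if "ALL" not in sc.get("capabilities", {}).get("drop", []):
            problems.append(f"{name}: drop ALL Linux capabilities")
    return problems
```

A container that genuinely needs a specific capability would add it back explicitly under `capabilities.add` after dropping `ALL`, which keeps each exception visible in the manifest.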

Auditing and Forensics

- Enable control plane logs

- Stream logs from containers to an external log aggregator

- Regularly audit the Kubernetes control plane logs and CloudTrail logs for suspicious activity

- Isolate pods immediately if you detect suspicious activity
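EKS can send five control plane log types to CloudWatch Logs: `api`, `audit`, `authenticator`, `controllerManager`, and `scheduler`. They are off by default and are enabled per cluster. A Python sketch that builds the `logging` argument for the boto3 EKS client's `update_cluster_config` call; the cluster name is hypothetical, and the API call itself is left as a comment because it needs boto3 and AWS credentials.

```python
from typing import Any, Dict, Iterable

# Control plane log types that Amazon EKS can send to CloudWatch Logs.
EKS_LOG_TYPES = ("api", "audit", "authenticator", "controllerManager", "scheduler")


def control_plane_logging(types: Iterable[str] = EKS_LOG_TYPES) -> Dict[str, Any]:
    """Build the `logging` argument for boto3's eks.update_cluster_config()."""
    wanted = list(types)
    unknown = set(wanted) - set(EKS_LOG_TYPES)
    if unknown:
        raise ValueError(f"unknown EKS log types: {sorted(unknown)}")
    return {"clusterLogging": [{"types": wanted, "enabled": True}]}


# Usage (requires boto3 and AWS credentials; "my-cluster" is a hypothetical name):
#   import boto3
#   boto3.client("eks").update_cluster_config(
#       name="my-cluster", logging=control_plane_logging())
```

The `audit` log type is the one most relevant to forensics, since it records who called the Kubernetes API and what they did.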

Network Security

- Begin with a deny-all global policy and gradually add allow policies as needed

- Use Kubernetes network policies to restrict traffic between pods within a cluster

- Restrict outbound traffic from pods that don’t need to connect to external services

- Encrypt service-to-service traffic using a service mesh
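A deny-all baseline in Kubernetes is a NetworkPolicy whose empty `podSelector` matches every pod in a namespace and which lists both policy types without any rules; narrower allow policies are then layered on top. A Python sketch with hypothetical namespace, label, and port values; note that policies are only enforced when the cluster runs a network policy engine, such as Calico.

```python
import json
from typing import Any, Dict

NET_API = "networking.k8s.io/v1"


def default_deny_all(namespace: str) -> Dict[str, Any]:
    """Block all ingress and egress for every pod in the namespace."""
    return {
        "apiVersion": NET_API,
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector matches all pods in the namespace
            # A listed policy type with no rules allows no traffic of that type.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


def allow_ingress_from(namespace: str, app: str, from_app: str,
                       port: int) -> Dict[str, Any]:
    """Allow TCP traffic to pods labelled app=<app> only from app=<from_app>."""
    return {
        "apiVersion": NET_API,
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace, app labels, and port.
    items = [default_deny_all("shop"),
             allow_ingress_from("shop", "orders", "frontend", 8080)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Because the baseline also denies egress, the `frontend` pods need a matching egress rule toward `orders`, and most workloads need an egress rule that permits DNS lookups to the cluster DNS service.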

Optimize Kubernetes on AWS with ClearScale Today

To summarize what we’ve covered, Amazon EKS is a valuable tool for scaling Kubernetes applications in the cloud. The service integrates seamlessly with other AWS products, enabling development teams to deploy high-performing applications.

On top of that, Kubernetes has a fast-growing support community and clear best practices for maintaining clusters and applications, including those that leverage automation for continuous deployment and security.

Finally, Amazon continues to invest heavily in its Kubernetes services and capabilities. There is no cloud provider in a better position to support your containerized applications. If you are considering migrating microservices to the cloud, choose Amazon EKS.

For those who have additional questions about how to make the most of Kubernetes deployments, schedule a free consultation with one of our cloud experts today.